The main difference between multispectral and hyperspectral imagery is the number of bands and how narrow those bands are.

Multispectral imagery generally refers to 3 to 10 bands. Each band has a descriptive title.

Today, we will explore the differences between these types of imagery.

We also hope to provide you with an intuition about the EM spectrum and the different types of sensors with these capabilities.

What are the Differences Between Multispectral and Hyperspectral Imagery?

The two types of imagery differ in how many bands they record and in how wide a slice of the electromagnetic spectrum each band covers.

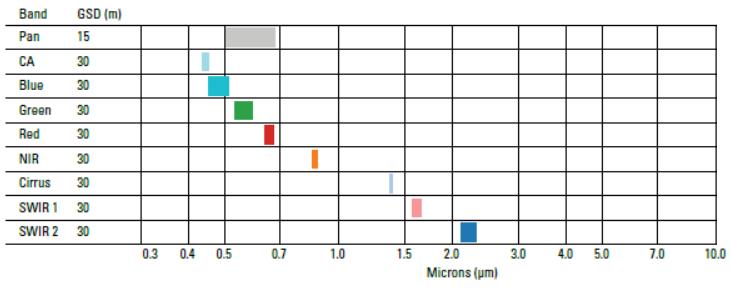

For example, the multispectral channels below include red, green, blue, near-infrared, and short-wave infrared.

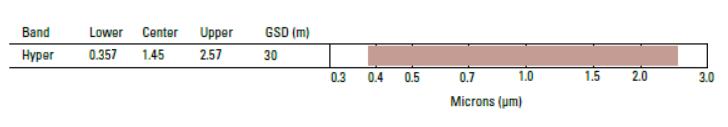

Hyperspectral imagery consists of much narrower bands (10-20 nm). A hyperspectral image could have hundreds or thousands of bands. In general, these bands don’t have descriptive channel names.

What is Multispectral Imagery?

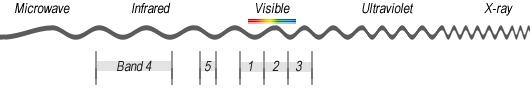

Landsat-8 is an example of a multispectral sensor. It produces 11 images, one for each of the following bands:

- COASTAL AEROSOL in band 1 (0.43-0.45 um)

- BLUE in band 2 (0.45-0.51 um)

- GREEN in band 3 (0.53-0.59 um)

- RED in band 4 (0.64-0.67 um)

- NEAR INFRARED (NIR) in band 5 (0.85-0.88 um)

- SHORT-WAVE INFRARED (SWIR 1) in band 6 (1.57-1.65 um)

- SHORT-WAVE INFRARED (SWIR 2) in band 7 (2.11-2.29 um)

- PANCHROMATIC in band 8 (0.50-0.68 um)

- CIRRUS in band 9 (1.36-1.38 um)

- THERMAL INFRARED (TIRS 1) in band 10 (10.60-11.19 um)

- THERMAL INFRARED (TIRS 2) in band 11 (11.50-12.51 um)

Each band has a spatial resolution of 30 meters except for bands 8, 10, and 11. Band 8 has a spatial resolution of 15 meters, while bands 10 and 11 have a 100-meter pixel size. There is no band in the 0.88-1.36 um range because the atmosphere absorbs light at these wavelengths.
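The band list and pixel sizes above can be captured in a small lookup table. The following is a minimal sketch, not an official data structure: the names `LANDSAT8_BANDS`, `bandwidth_nm`, and `bands_with_pixel_size` are made up for illustration, and the numbers are copied from the list above.

```python
# Landsat-8 bands as listed above:
# band number -> (name, lower edge in um, upper edge in um, pixel size in m)
LANDSAT8_BANDS = {
    1: ("Coastal aerosol", 0.43, 0.45, 30),
    2: ("Blue", 0.45, 0.51, 30),
    3: ("Green", 0.53, 0.59, 30),
    4: ("Red", 0.64, 0.67, 30),
    5: ("NIR", 0.85, 0.88, 30),
    6: ("SWIR 1", 1.57, 1.65, 30),
    7: ("SWIR 2", 2.11, 2.29, 30),
    8: ("Panchromatic", 0.50, 0.68, 15),
    9: ("Cirrus", 1.36, 1.38, 30),
    10: ("TIRS 1", 10.60, 11.19, 100),
    11: ("TIRS 2", 11.50, 12.51, 100),
}


def bandwidth_nm(band):
    """Width of a band in nanometers (1 um = 1000 nm), rounded to the nearest nm."""
    _, lower_um, upper_um, _ = LANDSAT8_BANDS[band]
    return round((upper_um - lower_um) * 1000)


def bands_with_pixel_size(size_m):
    """Band numbers whose pixel size equals size_m meters."""
    return [b for b, (_, _, _, px) in LANDSAT8_BANDS.items() if px == size_m]


print(bandwidth_nm(4))             # width of the red band in nm
print(bands_with_pixel_size(100))  # the two thermal bands
```

With these numbers, the red band comes out about 30 nm wide and the panchromatic band about 180 nm wide, both wider than the 10-20 nm bands typical of hyperspectral imagery.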

What is Hyperspectral Imagery?

In 1994, NASA planned the first hyperspectral satellite called the TRW Lewis. Unfortunately, NASA lost contact with it shortly after its launch.

NASA later had a successful launch. In 2000, it launched the EO-1 satellite, which carried the hyperspectral sensor “Hyperion”. In fact, the Hyperion imaging spectrometer was the first hyperspectral sensor in space.

Hyperion produces 30-meter resolution images in 242 spectral bands (0.4-2.5 um). If you want to test out Hyperion imagery for yourself, you can download the data for free from USGS EarthExplorer.
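To get a feel for how narrow these bands are, here is a rough back-of-the-envelope sketch. It assumes the 242 bands are spread evenly over the 0.4-2.5 um range, which is a simplification: a real sensor's band centers and overlaps will differ. The function name `mean_band_spacing_nm` is hypothetical.

```python
def mean_band_spacing_nm(lower_um, upper_um, n_bands):
    """Average spacing in nm if n_bands were spread evenly over the range.

    Assumption: even spacing with no overlap, which real sensors do not
    guarantee. This is only an order-of-magnitude estimate.
    """
    return (upper_um - lower_um) * 1000 / n_bands


hyperion_spacing = mean_band_spacing_nm(0.4, 2.5, 242)
print(f"{hyperion_spacing:.1f} nm per band")
```

Under that assumption the spacing works out to a little under 9 nm per band, which is much narrower than the individual Landsat-8 bands.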

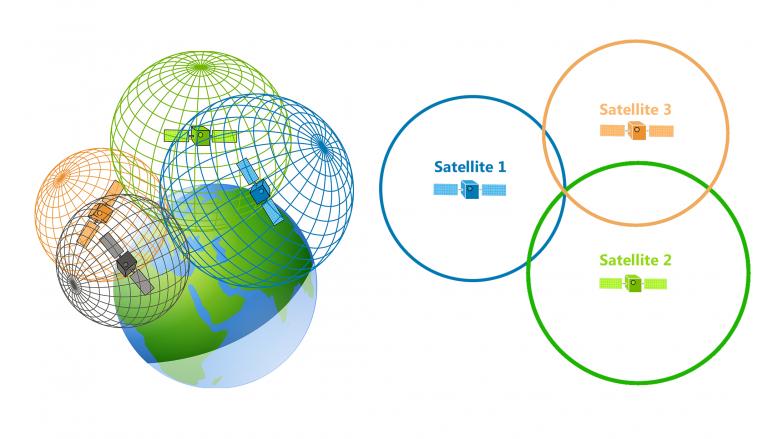

Hyperion kicked off hyperspectral imaging from space. Other spaceborne hyperspectral imaging missions include:

- PROBA-1 (ESA) in 2001

- PRISMA (Italy) in 2019

- EnMAP (Germany) in 2022

- HISUI (Japan) in 2019

- HyspIRI (United States), a proposed mission that has not launched

An Intuition for Multispectral and Hyperspectral

As you read this post, your eyes see reflected energy. A computer represents the same scene in three channels: red, green, and blue.

- If you were a goldfish, you would see light differently. A goldfish can see infrared radiation which is invisible to the human eye.

- Bumblebees can see ultraviolet light. Again, humans can’t see ultraviolet radiation with our eyes, even though UV-B harms us.

Now, imagine if we could view the world through the eyes of a human, a goldfish, and a bumblebee. Actually, we can. We do this with multispectral and hyperspectral sensors.

Multispectral vs Hyperspectral Imagery

- Multispectral: 3-10 wider bands.

- Hyperspectral: Hundreds of narrow bands.

The Electromagnetic Spectrum

Visible (red, green, and blue), infrared, and ultraviolet are descriptive regions in the electromagnetic spectrum. We humans made up these regions for our own convenience, to classify radiation. Each region is categorized based on its frequency (ν).

- Humans see visible light (380 nm to 700 nm)

- Goldfish see infrared (700 nm to 1 mm)

- Bumblebees see ultraviolet (10 nm to 380 nm)
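The wavelength ranges in the list above can be turned into a simple lookup. This is a minimal sketch with made-up names (`REGIONS`, `region_of`, `frequency_hz`). It assumes each range includes its lower edge and excludes its upper edge, a choice the list itself does not specify. Frequency and wavelength are related by ν = c / λ, so shorter wavelengths mean higher frequencies.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by SI definition

# Region boundaries in nanometers, taken from the list above.
REGIONS = [
    ("ultraviolet", 10, 380),
    ("visible", 380, 700),
    ("infrared", 700, 1_000_000),  # 1 mm = 1,000,000 nm
]


def region_of(wavelength_nm):
    """Return the region name for a wavelength, or None if outside all three.

    Assumption: lower edge inclusive, upper edge exclusive.
    """
    for name, lower_nm, upper_nm in REGIONS:
        if lower_nm <= wavelength_nm < upper_nm:
            return name
    return None


def frequency_hz(wavelength_nm):
    """Frequency from wavelength via nu = c / lambda."""
    return SPEED_OF_LIGHT_M_PER_S / (wavelength_nm * 1e-9)


print(region_of(550))            # green light
print(region_of(865))            # inside Landsat-8's NIR band
print(f"{frequency_hz(550):.3e} Hz")
```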

Multispectral and hyperspectral imagery give us the power to see like humans (red, green, and blue), goldfish (infrared), and bumblebees (ultraviolet). In fact, we can see even more than this, because the sensor records whatever reflected EM radiation reaches it in each band.

Summary: Multispectral vs Hyperspectral

The higher level of spectral detail in hyperspectral images gives a better capability to see the unseen. For example, hyperspectral remote sensing has distinguished between 3 minerals because of its high spectral resolution, whereas the multispectral Landsat Thematic Mapper could not tell them apart.
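A toy example can show why narrow bands help here. The sketch below uses two entirely synthetic spectra ("material A" and "material B", not real minerals), each flat except for one narrow absorption dip at a different wavelength. It also assumes a wide band simply records the mean over its range, which simplifies how a real sensor responds. All names and values are hypothetical.

```python
# Narrow-band sample centers in nm, 10 nm apart (2110 .. 2290).
WAVELENGTHS_NM = list(range(2110, 2300, 10))


def synthetic_spectrum(dip_center_nm, base=50, depth=20):
    """Flat reflectance (in percent) with one narrow absorption dip.

    Purely illustrative shape; not measured data.
    """
    return [base - depth if w == dip_center_nm else base for w in WAVELENGTHS_NM]


def broad_band_value(spectrum):
    """What a single wide band would record under the mean-over-range assumption."""
    return sum(spectrum) / len(spectrum)


material_a = synthetic_spectrum(2160)
material_b = synthetic_spectrum(2200)

# Wavelengths where the narrow-band samples disagree.
narrow_differences = [
    w for w, a, b in zip(WAVELENGTHS_NM, material_a, material_b) if a != b
]

# Difference seen by one wide band covering the whole range.
broad_gap = abs(broad_band_value(material_a) - broad_band_value(material_b))

print(narrow_differences)
print(broad_gap)
```

The narrow-band samples differ at the two dip wavelengths, while the single wide band records the same average for both materials and so cannot separate them.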

One drawback is that the extra detail adds a level of complexity. If you have 200 narrow bands to work with, how can you reduce redundancy between channels?
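One simple way to approach that question is to drop bands that are almost perfectly correlated with a band you already kept. The sketch below is only an illustration of that idea on tiny synthetic data. The greedy rule, the 0.95 threshold, and the names `pearson` and `select_bands` are all assumptions, not a method taken from this post.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def select_bands(bands, threshold=0.95):
    """Greedy selection: keep a band only if it is not highly correlated
    (|r| >= threshold) with any band that was already kept."""
    kept = []
    for i, band in enumerate(bands):
        if all(abs(pearson(band, bands[j])) < threshold for j in kept):
            kept.append(i)
    return kept


# Synthetic data: each band holds values for the same six pixels.
signal_1 = [10, 20, 30, 40, 50, 60]
signal_2 = [35, 10, 50, 20, 60, 30]
jitter_a = [1, -1, 1, -1, 1, -1]
jitter_b = [-1, 1, 1, -1, -1, 1]

bands = [
    signal_1,
    [v + d for v, d in zip(signal_1, jitter_a)],  # near copy of band 0
    [v + d for v, d in zip(signal_1, jitter_b)],  # near copy of band 0
    signal_2,
    [v + d for v, d in zip(signal_2, jitter_a)],  # near copy of band 3
]

print(select_bands(bands))
```

Here the five synthetic bands collapse to two, because three of them are near copies of the others. Real hyperspectral data is noisier, so the threshold would need tuning.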

Hyperspectral and multispectral images have many real-world applications. For example, we use hyperspectral imagery to map invasive species and help in mineral exploration.

There are hundreds more applications where multispectral and hyperspectral imagery enable us to understand the world. For example, we use them in the fields of agriculture, ecology, oil and gas, atmospheric studies, and more.